Kian Zohoury

Welcome to my personal blog, where I showcase my projects and talk about AI & machine learning.

Below is a list of recent projects. Corresponding code for each entry can be accessed through the provided GitHub link.

conditional VAEs for generating handwritten digits (MNIST)

Exploring conditional variational autoencoders (CVAEs) for guided image generation of handwritten digits, using the famous MNIST dataset.

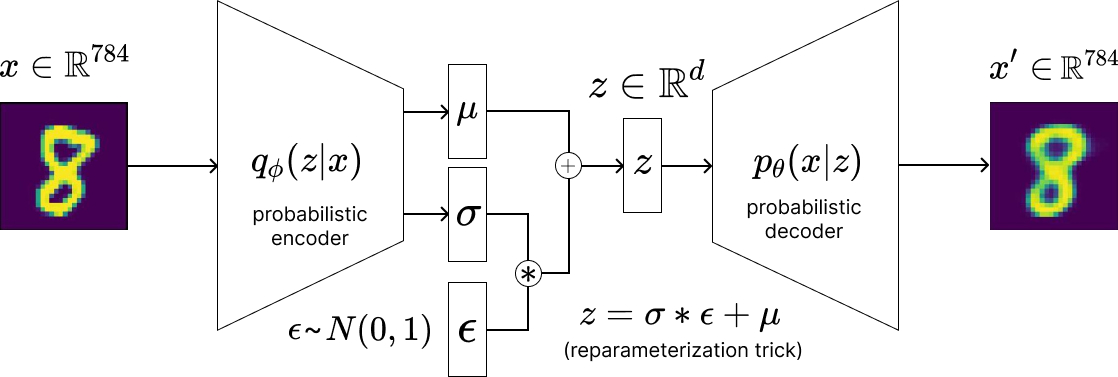

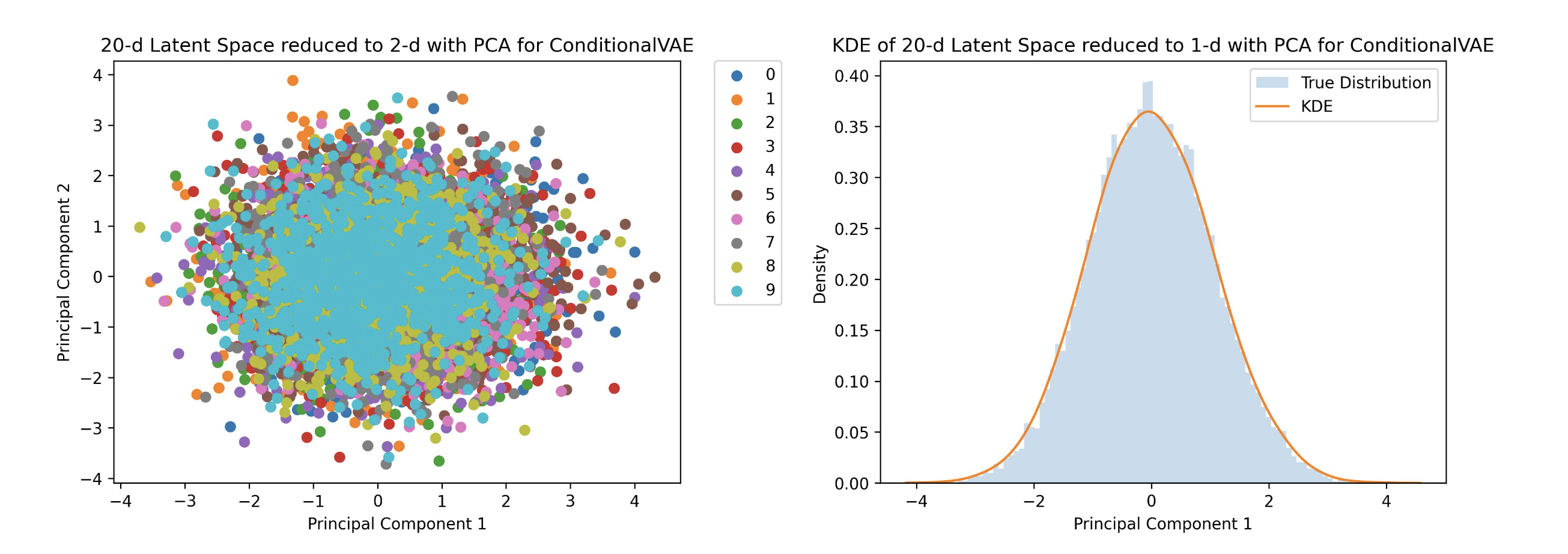

While VAEs are not new, they are pivotal for understanding how variational inference allows us to approximate posterior distributions. For this reason, VAEs are widely used (over vanilla autoencoders) in latent diffusion models. I provide an in-depth comparative analysis of vanilla autoencoders, VAEs, and CVAEs, observing properties of the latent spaces produced by deterministic and probabilistic encoders. Dimensionality reduction techniques like principal component analysis (PCA) and t-distributed stochastic neighbor embedding (t-SNE) are used to visualize both the d-dimensional latent spaces and the high-dimensional pixel space. Kernel density estimates (KDEs) are also used to visually assess whether latent distributions appear univariate/bivariate normal.
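To make the probabilistic encoder concrete, here is a minimal, framework-free sketch of the two pieces that usually separate a VAE from a vanilla autoencoder: sampling the latent code with the reparameterization trick, and the closed-form KL term that pulls the approximate posterior toward a standard normal prior. It is not taken from the project's source. The function names, the diagonal-Gaussian posterior, and the one-hot label concatenation used for conditioning are all assumptions made for illustration.

```python
import math
import random


def reparameterize(mu, log_var, rng=random):
    """Sample z = mu + sigma * eps with eps ~ N(0, 1), one draw per latent dimension."""
    return [m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0)
            for m, lv in zip(mu, log_var)]


def kl_to_standard_normal(mu, log_var):
    """Closed-form KL( N(mu, diag(sigma^2)) || N(0, I) ), summed over dimensions."""
    return -0.5 * sum(1.0 + lv - m * m - math.exp(lv)
                      for m, lv in zip(mu, log_var))


def one_hot(label, num_classes=10):
    """Encode a digit label as a one-hot vector (10 classes assumed for MNIST)."""
    return [1.0 if i == label else 0.0 for i in range(num_classes)]


def conditional_decoder_input(z, label, num_classes=10):
    """Hypothetical CVAE conditioning: append the one-hot label to the latent code."""
    return list(z) + one_hot(label, num_classes)


# Example: a 2-dimensional latent code conditioned on the digit 7.
mu, log_var = [0.3, -1.2], [-0.5, 0.1]
z = reparameterize(mu, log_var, random.Random(0))
print("KL term:", kl_to_standard_normal(mu, log_var))
print("decoder input length:", len(conditional_decoder_input(z, 7)))
```

In a real model, `mu` and `log_var` would be encoder outputs, and the KL term would be added to a reconstruction loss to form the training objective.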

Source code can be found here.

topics: deep learning, image processing, variational inference

auralflow 🔊🎵

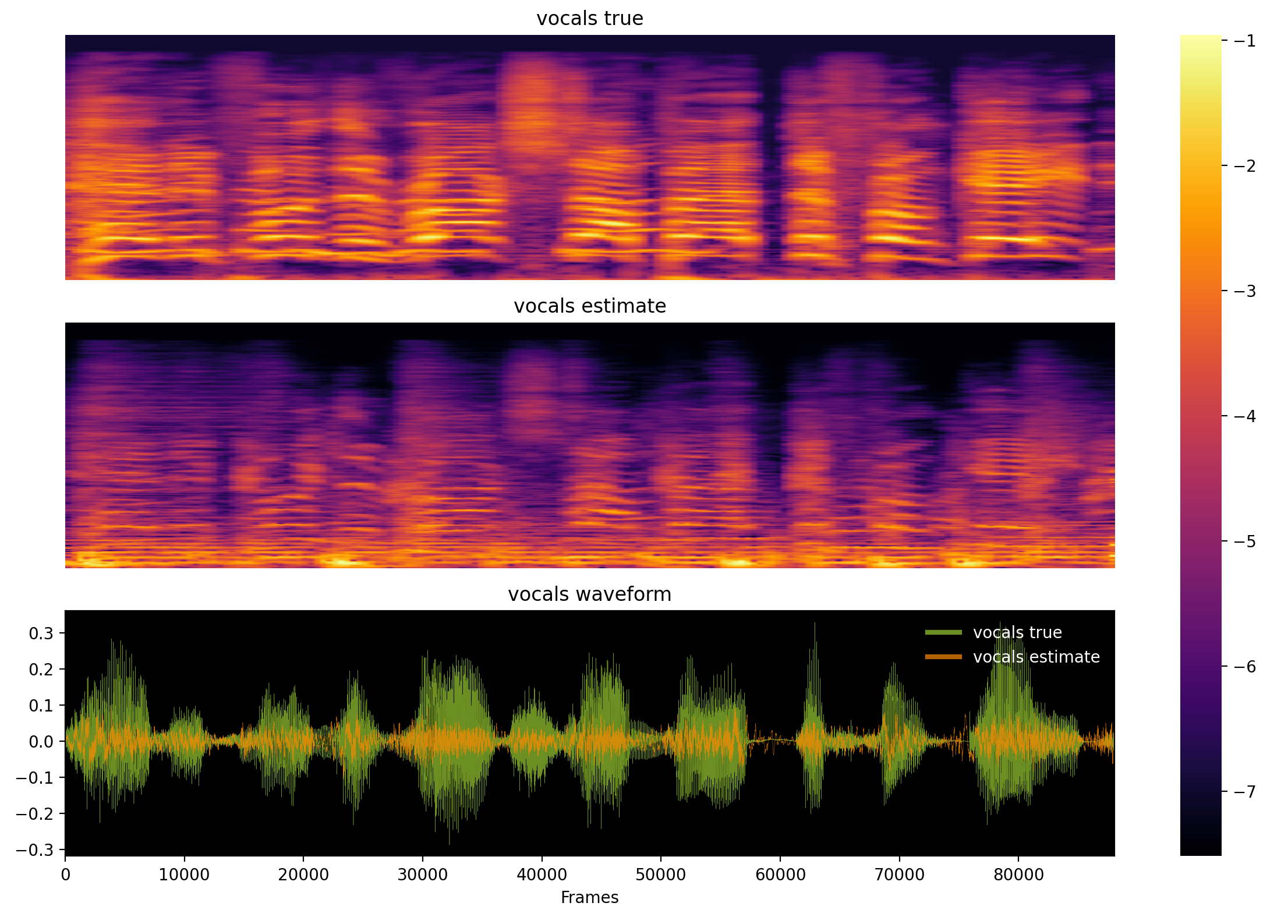

PyTorch-based application for training/evaluating source separation models to extract stems (e.g. vocals) from audio for music remixing.

As someone who previously pursued music production for a living, I was constantly searching for studio-quality acapellas (vocals only) for song remixing. While several models existed at the time (e.g. DEMUCS, Open-Unmix), there weren’t any modeling toolkits available for customizing such models. To aid my own model development and training/evaluation workflows, I designed a package with the following:

- pre-trained source separators (end-to-end audio generation)

- dataset classes designed for efficient audio data chunking

- audio-tailored loss functions (e.g. component loss, Si-SDR loss, etc.)

- model wrappers with built-in pre/post audio processing methods (e.g. STFT, phase approximation, etc.)

- high-level object-oriented model trainer (similar to Lightning)

- visualization tools for audio and spectrogram representations

- GPU-accelerated MIR evaluation (e.g. Si-SDR, Si-SNR, etc.)

- source separation of large audio folders
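As an illustration of the Si-SDR metric mentioned in the list above, here is a minimal sketch of scale-invariant signal-to-distortion ratio for a single mono signal, written in plain Python instead of PyTorch. It follows the commonly used definition (project the estimate onto the reference, then compare the energy of the projection against the residual) and is not auralflow's actual implementation. The function name, the `eps` stabilizer, and the zero-mean step are assumptions.

```python
import math


def si_sdr(estimate, reference, eps=1e-8):
    """Scale-invariant SDR in dB between two equal-length 1-D signals."""
    # Remove the DC offset from both signals.
    est_mean = sum(estimate) / len(estimate)
    ref_mean = sum(reference) / len(reference)
    est = [e - est_mean for e in estimate]
    ref = [r - ref_mean for r in reference]

    # Project the estimate onto the reference to get the scaled target.
    dot = sum(e * r for e, r in zip(est, ref))
    ref_energy = sum(r * r for r in ref)
    scale = dot / (ref_energy + eps)
    target = [scale * r for r in ref]

    # Everything not explained by the scaled reference counts as distortion.
    residual = [e - t for e, t in zip(est, target)]
    target_energy = sum(t * t for t in target)
    residual_energy = sum(n * n for n in residual)
    return 10.0 * math.log10((target_energy + eps) / (residual_energy + eps))


def si_sdr_loss(estimate, reference):
    """Negated Si-SDR, so that minimizing the loss maximizes the metric."""
    return -si_sdr(estimate, reference)


reference = [1.0, -1.0, 1.0, -1.0]
estimate = [1.1, -0.9, 1.2, -1.0]
print("Si-SDR (dB):", si_sdr(estimate, reference))
print("Si-SDR of a louder copy (dB):", si_sdr([2 * e for e in estimate], reference))
```

Because the estimate is projected onto the reference before comparing energies, rescaling the estimate leaves the score essentially unchanged, which is the property that makes the metric attractive for separation models whose output gain is arbitrary.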

The project is not currently being maintained, but I plan on resuming development soon. For the source code, go here.

topics: deep learning, signal processing, audio

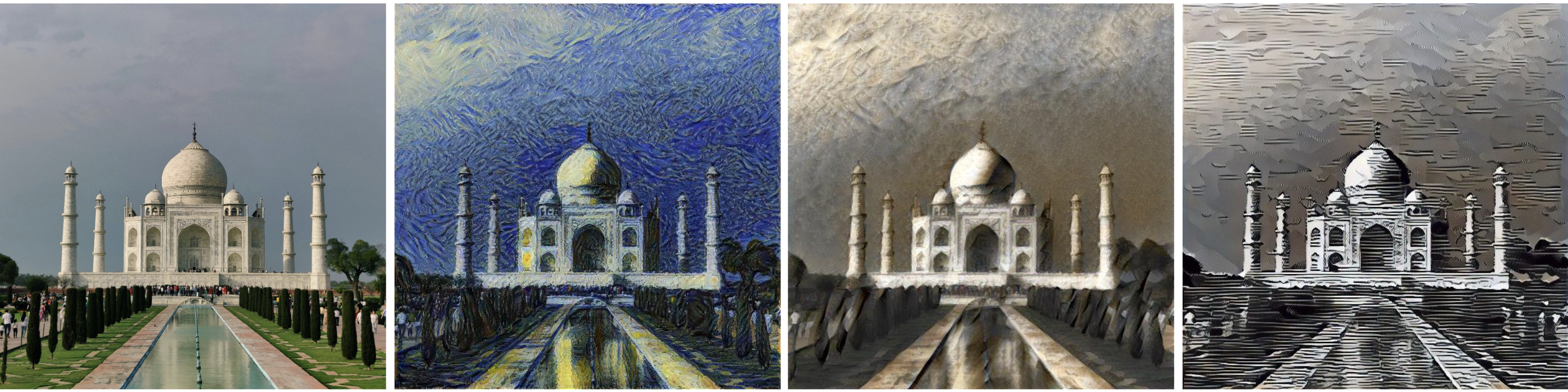

neural style transfer 🎨 📷

PyTorch-based implementations (from scratch) of several distinct deep learning approaches (optimization, transformation networks, CycleGAN) that aim to solve a popular problem in computer vision called style transfer.

Put simply, the task in style transfer is to generate an image that preserves the content of image x (i.e. semantics, shapes, edges, etc.) while matching the style of image y (i.e. textures, patterns, color, etc.). One may ask: what is the correct balance between content and style? As it turns out, the answer is more subjective than in typical optimization/ML problems - “beauty is in the eye of the beholder”, as they say.
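The content/style balance can be made concrete with a small sketch of the kind of objective used in optimization-based style transfer: content is compared directly on feature maps, style is compared on Gram matrices of those feature maps, and two weights set the trade-off. This is an illustration in plain Python, not the repository's code. The function names, the default weights, and the use of a single feature layer are assumptions.

```python
def gram_matrix(features):
    """Channel-by-channel correlations of a feature map.

    `features` is a list of C channels, each a flat list of H*W activations.
    """
    n = len(features[0])
    return [[sum(a * b for a, b in zip(fi, fj)) / n for fj in features]
            for fi in features]


def mean_squared_error(a, b):
    """Mean squared difference between two equally shaped nested lists."""
    flat_a = [v for row in a for v in row]
    flat_b = [v for row in b for v in row]
    return sum((x - y) ** 2 for x, y in zip(flat_a, flat_b)) / len(flat_a)


def style_transfer_loss(generated, content, style,
                        content_weight=1.0, style_weight=1e3):
    """Weighted sum of a content term and a Gram-matrix style term."""
    content_loss = mean_squared_error(generated, content)
    style_loss = mean_squared_error(gram_matrix(generated), gram_matrix(style))
    return content_weight * content_loss + style_weight * style_loss


# Toy feature maps with 2 channels and 2 spatial positions each.
content_feats = [[1.0, 2.0], [3.0, 4.0]]
style_feats = [[0.5, 0.5], [2.0, 1.0]]
generated_feats = [[1.0, 1.5], [2.5, 3.0]]
print(style_transfer_loss(generated_feats, content_feats, style_feats))
```

Raising `style_weight` relative to `content_weight` pushes the result toward the textures of image y at the expense of the structure of image x, which is where the subjectivity comes in.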

For the source code, go here.

topics: deep learning, image processing, generative adversarial networks

ecoshopper ♻️ 🛒

AI-powered recycling assistant.

Ecoshopper was a prototype mobile web application designed as a final project for the Summer 2021 iteration of UC Berkeley’s CS 160. The core ML design of the app involved a pretrained VGG16 backbone that served as a rich feature extractor for transfer learning. Using Stanford’s TrashNet dataset along with human annotation, a downstream classifier was trained to distinguish recyclable goods from non-recyclable goods.

Model implementation and deployment were done with PyTorch and Django, and front-end development was done with React Native. For the source code, go here.

topics: deep learning, image classification, app development